Overview

This project explores the social implications of generative AI technologies, particularly image-based technologies. It focusses on the ability of these technologies to generate images that look like people. These images can either represent a synthetic person, or they can represent a real person. Colloquially, these are known as ‘deep fakes’.

While many studies have examined the veracity of these images (for example, by building a ‘deep fake detector’), this project instead addresses the issue of likeness detection. If it is possible to recognize the specific individual in an image, it can be said that the image contains the likeness of that person.

Following from this, the project raises the question: does the model that created the image also contain the likeness of the person? This question establishes the basis for governing spaces where generative images proliferate, where the likeness becomes a form of property which can now be traded, exchanged and licensed inside a model trained on images of a person.

In the absence of appropriate legal and regulatory frameworks, the communities of practice around the generation of synthetic images tend to self-govern on these issues of image training. The governance of these communities of practice suggests evolving norms about the appropriate training or display of a likeness.

This project takes a two-stage approach to exploring this issue:

- A participant observation study of the online communities of practice surrounding the creation of synthetic images, with the primary goal of understanding and cataloguing the themes and discourses surrounding the use of a proper likeness.

- An online experiment using synthetic images trained on volunteers, seeking to assess the perception of stranger faces in deepfakes versus synthetic text-to-image models.

Insights from this work can provide clear guidance to policymakers in an area currently without adequate legal and regulatory frameworks. In particular, the work will shed light on the distinction between ‘mere’ misinformation and what constitutes misrepresentation of specific likenesses in synthetic images.

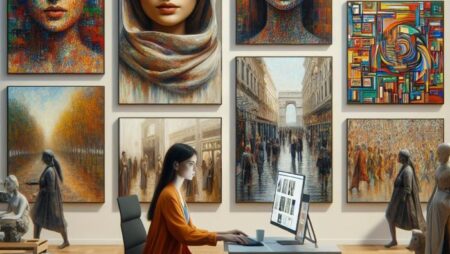

Photo:

This image was generated with Stable Diffusion XL, using a custom model derived from the base SDXL 1.0 model and trained by Bernie Hogan on his own likeness.