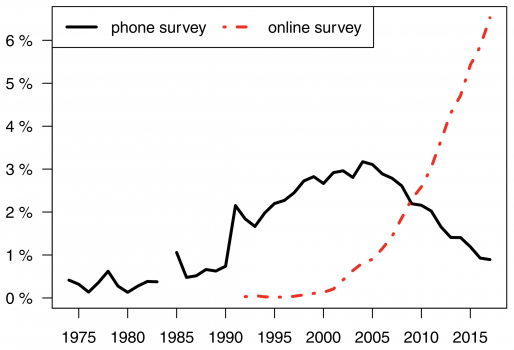

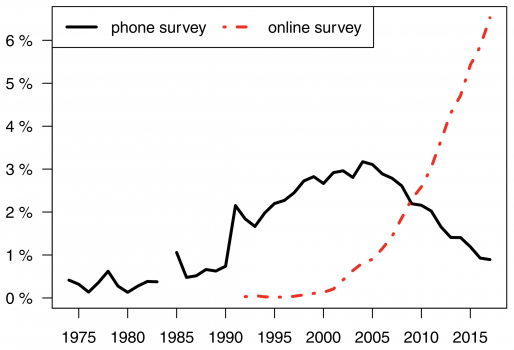

Survey research used to be the province of skilled specialists who often dedicated their entire careers to it. But with the rise of inexpensive online panels, tools like SurveyMonkey, and social media like Facebook, any graduate student or think-tank researcher can now conduct a survey themselves. This change is reflected in the number of published research articles that use different survey methods: telephone surveys are becoming relatively rare, while online surveys are exploding in popularity.

Phone and online survey publications as percentage of all survey publications in the Social Sciences Citation Index. Source: Lehdonvirta et al. 2020.

However, most of the literature on survey methods still focuses on elaborate phone- and mail-based probability surveys, where respondents are randomly drawn from a population register or similar. Cheap and fast non-probability online surveys, where respondents are recruited via social media ads, blog posts, online panels, and similar channels, have received a lot less attention. Our new article, “Social Media, Web, and Panel Surveys: Using Non‐Probability Samples in Social and Policy Research”, addresses this gap.

In this blog post I draw some practical advice from the article for would-be survey researchers. As you will see, there are two basic approaches to recruiting survey respondents online, each with its pros and cons.

Recruiting respondents by offering compensation

A typical example of compensation-based recruitment is paying a commercial survey company for access to their online panel of respondents. The survey company provides compensation to the respondents either directly in cash or via loyalty points, discount codes or similar. Paying people to respond to your survey via a platform like Amazon Mechanical Turk (MTurk) or Prolific is also a form of compensation-based recruitment.

Compensation-based online surveys are much cheaper to run than mail surveys or phone surveys, but they still come with a cost. Depending on what kind of respondents you are looking for, you can expect to pay anywhere from $1 to $10 per respondent for a 20-minute survey.

The advantage of compensation-based recruitment is that you can usually influence what kind of respondents you are getting in terms of demographics. With a commercial online panel you can for instance request a sample of respondents whose gender and age distributions correspond with the national gender and age distributions.
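If you want to check how closely a delivered sample matches the distributions you requested, a few lines of Python are enough. The following is a minimal sketch, not something taken from our article: the function name `share_gaps`, the age groups, and all the numbers are made up for illustration.

```python
from collections import Counter


def share_gaps(sample_groups, target_shares):
    """Return sample share minus target share for each demographic group.

    sample_groups: one group label per respondent, e.g. an age bracket.
    target_shares: group label -> share in the target population (sums to 1).
    """
    n = len(sample_groups)
    if n == 0:
        raise ValueError("sample is empty")
    counts = Counter(sample_groups)
    # Positive gap: group is over-represented; negative: under-represented.
    return {group: counts.get(group, 0) / n - share
            for group, share in target_shares.items()}


# Hypothetical numbers, purely for illustration.
sample = ["18-34"] * 30 + ["35-54"] * 40 + ["55+"] * 30
national = {"18-34": 0.25, "35-54": 0.35, "55+": 0.40}

for group, gap in share_gaps(sample, national).items():
    print(f"{group}: {gap:+.1%}")
```

Keep in mind that a check like this only covers the variables you compare. A sample can match on age and gender and still differ from the population in other ways.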

Still, a sample like this is not the same as a random sample drawn from the national population. In our study, respondents from a commercial online panel, sampled to mirror the national population in terms of demographics, were four times as likely to report being cyberharassment victims as the national average. One possible cause is that the members of such a sample are all avid Internet users, so they are likely to report higher incidences of anything Internet-related than the general population.

Another bias to watch out for in compensation-based recruitment is what we call economic self-selection. People who respond to paid surveys are more likely to be those who are in need of some cash. For instance, several studies have found that U.S. respondents recruited via MTurk are poorer than the average U.S. adult. Keep this in mind if you are trying to use a compensation-based survey to study something where people’s economic situation makes a difference.

Recruiting respondents by grabbing their attention

Advertising your survey on Facebook or inserting the survey link into a related blog post or news article are examples of attention-based recruitment. The idea is to grab as many people’s attention as you can and then get them to click through to the survey and fill it in. An advantage of this approach is that it can be very cheap or even free, which is great if you don’t have a big budget for your survey.

However, this approach results in a strong self-selection bias in your sample. The respondents are not just random Internet users; they are people who are interested in your survey topic for some reason, perhaps because they have relevant personal experiences. We found that people who responded to our survey on cyberharassment via a Facebook ad or a related news article were six times as likely to report having experienced cyberharassment as the national average. We call this topical self-selection. Topical self-selection means that attention-based recruitment is not a viable option if the purpose of your survey is to find out the national average of something.

But if your aim is to survey as many members as possible of a subpopulation like cyberharassment victims, then topical self-selection can actually be handy. With a smaller total sample size you get a larger share of the subpopulation members that you are interested in. Just remember that you can’t formally generalize from your sample to all cyberharassment victims, because it’s not a random sample of them. But you could for instance draw tentative conclusions about differences between female and male victims. We found that the cyberharassment victims who discovered our survey via attention-grabbing channels were not substantially different from those whom we recruited through the panel company, supporting the validity of this approach.
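To get a feel for how much topical self-selection can shrink the total sample you need, here is a rough back-of-the-envelope sketch. It assumes a hypothetical national prevalence of 5% (not a figure from our study) and treats "four times" and "six times as likely" as simple multipliers on that prevalence, which is a simplification. The function name `respondents_needed` is likewise just an illustration.

```python
import math
from fractions import Fraction


def respondents_needed(target_members, base_prevalence, multiplier=1):
    """Total respondents needed to expect `target_members` from the subpopulation.

    base_prevalence: share of the subpopulation in the general population.
    multiplier: how much more common members are in the sample than nationally.
    """
    # Exact fractions avoid floating-point surprises when rounding up.
    rate = Fraction(str(base_prevalence)) * Fraction(str(multiplier))
    rate = min(rate, Fraction(1))  # a share cannot exceed 100%
    if rate <= 0:
        raise ValueError("prevalence and multiplier must be positive")
    return math.ceil(target_members / rate)


# Hypothetical base prevalence of 5%; multipliers as reported in the text.
for label, multiplier in [("random sample", 1),
                          ("online panel", 4),
                          ("attention-based", 6)]:
    print(label, respondents_needed(300, 0.05, multiplier))
```

Under these assumptions, reaching 300 expected victims takes 6000 respondents with a random sample, 1500 with the panel, and 1000 with attention-based recruitment. The exact numbers matter less than the pattern: the stronger the self-selection, the fewer total respondents you need.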

Conclusion

Which online survey approach is correct thus depends on the aims of your study. And for some purposes, such as producing high-quality estimates of how common something is in the general population, old-fashioned probability surveys run by survey experts are still unbeatable. Check out our article for more details and advice:

Lehdonvirta, V., Oksanen, A., Räsänen, P., & Blank, G. (2020) Social Media, Web, and Panel Surveys: Using Non‐Probability Samples in Social and Policy Research. Policy & Internet.